引言

神经网络实验往往需要编写大量代码、调试参数、手动可视化结果,对于初学者而言门槛较高。传统的命令行式实验方式不仅操作繁琐,还难以直观展示算法效果。本文基于Gradio框架,构建了一个集经典神经网络(感知器、BP、Hopfield) 和深度学习模型(AlexNet/VGG/ResNet) 于一体的可视化 GUI 实验平台,无需编写代码,仅通过界面交互即可完成各类神经网络实验,大幅降低了实验门槛。

本平台支持以下核心实验:

- 感知器二分类实验

- BP 神经网络非线性函数拟合实验

- Hopfield 网络图像恢复实验

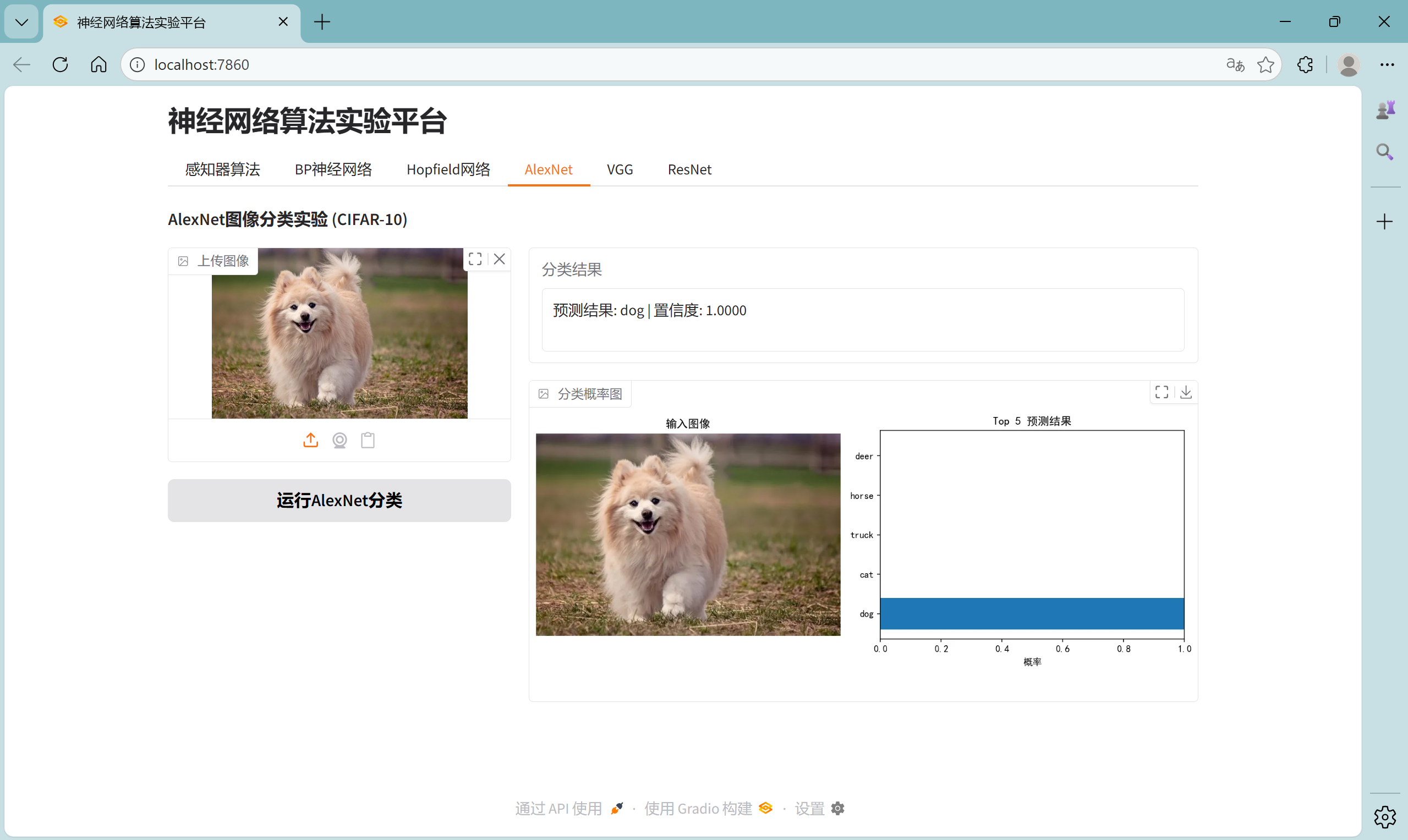

- AlexNet/VGG/ResNet 图像分类实验(CIFAR-10)

所有实验均集成在统一的 GUI 界面中,支持参数调节、实时可视化、结果导出,适合教学演示、自学验证、算法对比等场景。

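I. Core Platform Features

| Module | Core function | Interaction | Visual output |
|---|---|---|---|
| Perceptron | Binary classification; adjustable learning rate and max epochs | Sliders | Scatter plot, decision line, accuracy |
| BP neural network | Nonlinear function fitting; adjustable hidden-layer size, learning rate, max epochs, target loss | Multiple sliders | Original data vs. fitted curve, loss curve, network parameters |
| Hopfield network | Image recovery; adjustable noise level | Noise slider | Original / noisy / recovered images |
| AlexNet/VGG/ResNet | CIFAR-10 image classification | Image upload | Predicted class, Top-5 probability bar chart |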

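II. Tech Stack and Core Dependencies

1. Core dependencies

```text
# Base environment
python >= 3.8
# Core libraries
gradio >= 4.0        # GUI construction
torch >= 2.0         # deep learning models
torchvision >= 0.15  # model architectures and image transforms
numpy >= 1.24        # numerical computing
matplotlib >= 3.7    # visualization
Pillow >= 9.0        # image processing
```

2. Install command

```bash
pip install gradio torch torchvision numpy matplotlib pillow
```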

III. Core Code Walkthrough

1. Overall architecture

The platform uses Gradio's `Blocks` layout and organizes the experiments into separate modules with `Tabs`. The overall structure is:

```python
import gradio as gr


def create_interface():
    with gr.Blocks(title="神经网络算法实验平台") as demo:
        gr.Markdown("# 神经网络算法实验平台")
        with gr.Tabs():
            # Perceptron experiment tab
            with gr.Tab("感知器算法"): ...
            # BP neural network tab
            with gr.Tab("BP神经网络"): ...
            # Hopfield network tab
            with gr.Tab("Hopfield网络"): ...
            # Deep learning model tabs (AlexNet/VGG/ResNet)
            with gr.Tab("AlexNet"): ...
            with gr.Tab("VGG"): ...
            with gr.Tab("ResNet"): ...
    return demo
```

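2. Classic neural network modules

(1) Perceptron module

The perceptron is the simplest binary classifier. The snippet below shows the model and its training loop; data generation and plotting are in the complete code.

```python
import numpy as np


class Perceptron:
    def __init__(self, learning_rate=0.01, max_epochs=100):
        self.lr = learning_rate
        self.max_epochs = max_epochs
        self.w = None  # weights
        self.b = 0.0   # bias

    def activate(self, z):
        return 1 if z >= 0 else -1  # step activation

    def predict(self, x):
        return self.activate(np.dot(x, self.w) + self.b)

    def train(self, X, y):
        y_sym = np.where(y == 0, -1, 1)  # map labels {0, 1} to {-1, +1}
        n_samples, n_features = X.shape
        self.w = np.zeros(n_features)
        for epoch in range(self.max_epochs):
            updated = False
            for i in range(n_samples):
                y_pred = self.predict(X[i])
                if y_pred != y_sym[i]:
                    # Weight update rule, applied only to misclassified samples
                    self.w += self.lr * (y_sym[i] - y_pred) * X[i]
                    self.b += self.lr * (y_sym[i] - y_pred)
                    updated = True
            if not updated:  # a full pass with no update: stop early
                break
```

Interaction: the learning rate and the maximum number of epochs are set with sliders, a button click starts training, and the classification accuracy and the plot are shown once training finishes.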
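For reference, the rule that `train` implements can be written out as follows. Here $\eta$ stands for `lr`, $y \in \{-1, +1\}$ is the converted label, and $\hat{y}$ is the prediction; the symbols are notation introduced for this note, not names from the code.

$$
\hat{y} = \begin{cases} +1, & w^\top x + b \ge 0 \\ -1, & \text{otherwise} \end{cases}
\qquad
w \leftarrow w + \eta\,(y - \hat{y})\,x,
\qquad
b \leftarrow b + \eta\,(y - \hat{y})
$$

Because an update happens only when $\hat{y} \ne y$, the factor $y - \hat{y}$ always equals $2y$, so each correction moves the weights by $2\eta\,y\,x$. For two features, the decision line drawn in the plot is the set of points where $w_1 x_1 + w_2 x_2 + b = 0$, that is $x_2 = -(w_1 x_1 + b)/w_2$ whenever $w_2 \ne 0$.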

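(2) BP neural network module

The BP network is used for nonlinear function fitting and is implemented as a fully connected network in PyTorch. Its core structure:

```python
import torch
import torch.nn as nn


class BPNetwork(nn.Module):
    def __init__(self, hidden_size=10):
        super().__init__()
        self.hidden_layer = nn.Linear(1, hidden_size)  # input layer -> hidden layer
        self.output_layer = nn.Linear(hidden_size, 1)  # hidden layer -> output layer

    def forward(self, x):
        hidden_out = torch.tanh(self.hidden_layer(x))  # tanh activation
        output_out = torch.tanh(self.output_layer(hidden_out))
        return output_out
```

Key design points:

- The Adam optimizer is used to speed up convergence

- Early stopping is supported (training ends once the target loss is reached)

- Two plots are produced (original data with the fitted curve, and the loss curve)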
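Written as a formula, the network above and the objective it is trained on look like this. $W_1, b_1$ and $W_2, b_2$ denote the parameters of `hidden_layer` and `output_layer`, $d_i$ the target values, and $N$ the number of training points; this notation is introduced here for illustration, and the mean-squared-error form matches the `nn.MSELoss` criterion used in the complete code.

$$
\hat{y}(x) = \tanh\!\big(W_2 \tanh(W_1 x + b_1) + b_2\big),
\qquad
\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N}\big(\hat{y}(x_i) - d_i\big)^2
$$

Early stopping then simply means leaving the training loop at the first epoch whose recorded $\mathcal{L}$ falls below the target loss chosen on the slider. Since the output passes through $\tanh$, predictions are confined to $(-1, 1)$, which is adequate here because the targets in the experiment data lie inside that range.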

(3)Hopfield 网络模块

Hopfield 网络是经典的递归神经网络,用于联想记忆和图像恢复,核心实现:

1class HopfieldNetwork: 2 def __init__(self, num_neurons): 3 self.num_neurons = num_neurons 4 self.weights = np.zeros((num_neurons, num_neurons)) 5 6 def train(self, patterns): 7 # Hebbian学习规则训练权重 8 for i in range(self.num_neurons): 9 for j in range(self.num_neurons): 10 if i != j: 11 self.weights[i, j] = (1/self.num_neurons) * np.sum(patterns[:,i] * patterns[:,j]) 12 13 def predict(self, pattern, max_iter=100): 14 # 异步更新规则恢复图案 15 current = pattern.copy() 16 for _ in range(max_iter): 17 order = np.random.permutation(self.num_neurons) 18 new_state = current.copy() 19 for idx in order: 20 activation = np.dot(self.weights[idx,:], current) 21 new_state[idx] = 1 if activation >=0 else -1 22 if np.array_equal(new_state, current): 23 break 24 current = new_state 25 return current 26

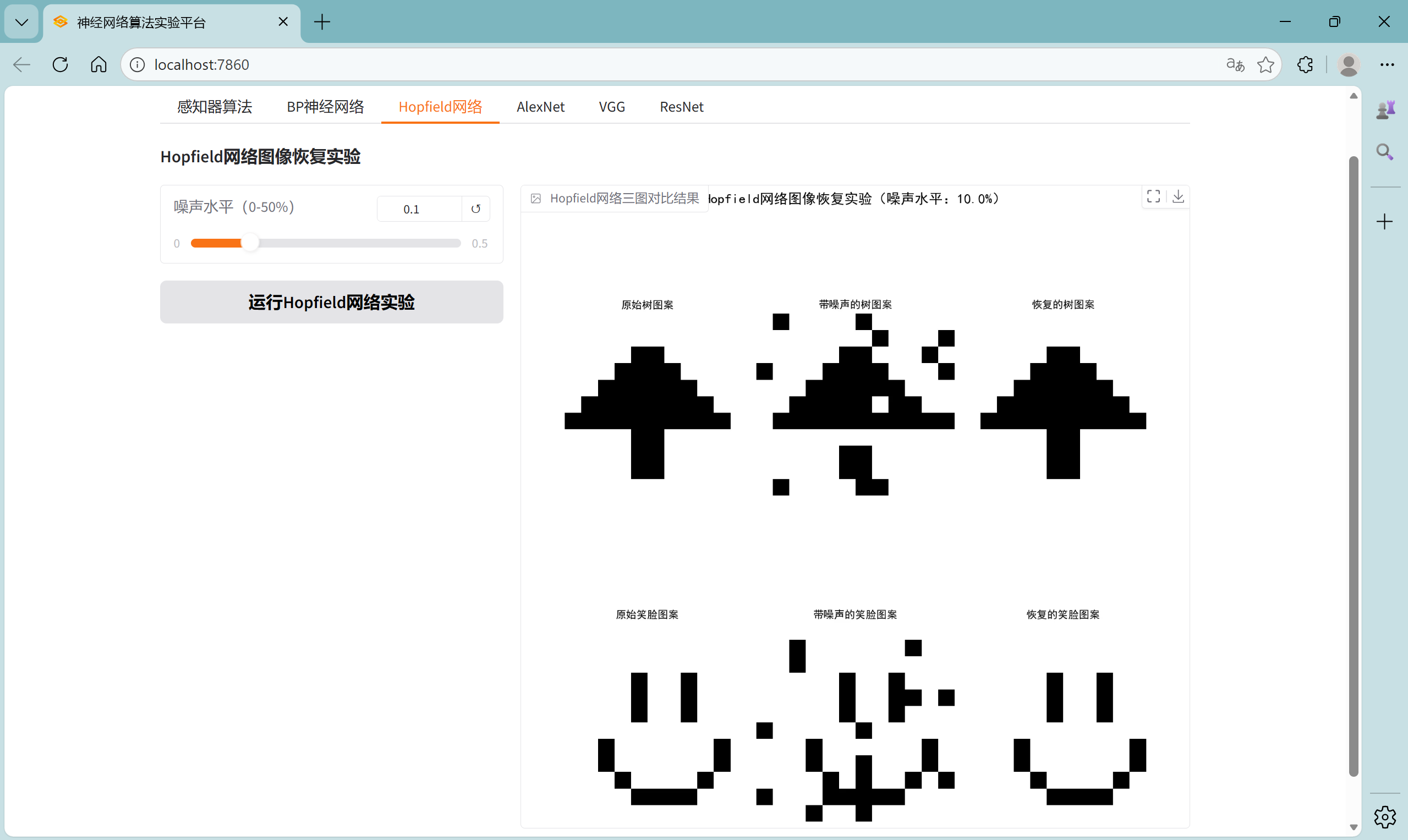

特色功能:自定义生成树和笑脸图案,支持不同噪声水平的图像恢复实验,三图对比直观展示恢复效果。
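The double loop in `train` costs a Python-level iteration per weight, which gets slow as the number of neurons grows. As a minimal sketch (not the article's code), the same Hebbian weights can be computed with one matrix product. The function names `hebbian_weights` and `hebbian_weights_loop` and the random test patterns are hypothetical and exist only for this comparison.

```python
import numpy as np


def hebbian_weights(patterns: np.ndarray) -> np.ndarray:
    """Vectorized Hebbian rule: W = P^T P / N with a zero diagonal.

    `patterns` has shape (num_patterns, num_neurons) with entries in {-1, +1}.
    """
    num_neurons = patterns.shape[1]
    weights = patterns.T @ patterns / num_neurons
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights


def hebbian_weights_loop(patterns: np.ndarray) -> np.ndarray:
    """Reference version using the same double loop as the class above."""
    num_neurons = patterns.shape[1]
    weights = np.zeros((num_neurons, num_neurons))
    for i in range(num_neurons):
        for j in range(num_neurons):
            if i != j:
                weights[i, j] = np.sum(patterns[:, i] * patterns[:, j]) / num_neurons
    return weights


# Two random +/-1 patterns over 16 neurons (illustrative data, not the tree/smiley images)
rng = np.random.default_rng(0)
demo_patterns = rng.choice([-1.0, 1.0], size=(2, 16))
W_fast = hebbian_weights(demo_patterns)
W_slow = hebbian_weights_loop(demo_patterns)
print("same weights:", np.allclose(W_fast, W_slow))
```

Both versions produce a symmetric matrix with a zero diagonal, which is the condition under which asynchronous updates are guaranteed to settle into a stable state.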

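3. Deep learning model modules

(1) Model loading and adaptation

The loading logic for AlexNet/VGG/ResNet is wrapped in a single function that adapts each architecture to the 10-class CIFAR-10 task:

```python
import os

import torch
import torchvision

# MODEL_PATHS and CLASSES are the module-level constants from the complete code
MODEL_PATHS = {
    "AlexNet": "best_alexnet_cifar10.pth",
    "ResNet": "best_resnet_cifar10.pth",
    "VGG": "best_vgg16_cifar10_final.pth",
}
CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']


def load_model(model_name):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    if model_name == "AlexNet":
        model = torchvision.models.alexnet(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Linear(in_features, len(CLASSES))
    elif model_name == "ResNet":
        model = torchvision.models.resnet18(weights=None)
        in_features = model.fc.in_features
        model.fc = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                       torch.nn.Linear(in_features, len(CLASSES)))
    elif model_name == "VGG":
        model = torchvision.models.vgg16(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                                  torch.nn.Linear(in_features, len(CLASSES)))
    else:
        raise ValueError(f"未知模型: {model_name}")
    # Load the trained weights if the checkpoint file exists
    if os.path.exists(MODEL_PATHS[model_name]):
        model.load_state_dict(torch.load(MODEL_PATHS[model_name], map_location=device))
    model.to(device)
    model.eval()
    return model, device
```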
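In the complete code, `predict_image` calls `load_model` on every button click, so the network is rebuilt and its weights re-read each time. One possible remedy, sketched below under the assumption that a model can safely be shared between requests, is to memoize the loader with `functools.lru_cache`. The names `expensive_load` and `load_model_cached` are hypothetical; `expensive_load` is only a stand-in for the real `load_model` so that the example runs without PyTorch.

```python
from functools import lru_cache

load_calls = []  # records how often the expensive loader actually runs


def expensive_load(model_name: str) -> dict:
    """Hypothetical stand-in for the article's load_model(model_name)."""
    load_calls.append(model_name)
    return {"name": model_name}


@lru_cache(maxsize=None)
def load_model_cached(model_name: str) -> dict:
    """Build each model at most once per process and reuse it afterwards."""
    return expensive_load(model_name)


first = load_model_cached("ResNet")
second = load_model_cached("ResNet")  # served from the cache, no second load
load_model_cached("VGG")
print(first is second, load_calls)  # True ['ResNet', 'VGG']
```

The trade-off is memory: every cached model stays resident until the process exits, which is acceptable for three small CIFAR-10 classifiers but worth reconsidering if more models are added.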

(2) Prediction and visualization

After an image is uploaded, it is preprocessed automatically and the platform outputs the predicted class together with a Top-5 probability bar chart:

```python
import io

import matplotlib.pyplot as plt
import torch
from PIL import Image

# load_model, get_transform and CLASSES are defined in the complete code


def predict_image(model_name, image):
    model, device = load_model(model_name)
    transform = get_transform(model_name)
    # Preprocess
    img = Image.fromarray(image).convert("RGB")
    img_tensor = transform(img).unsqueeze(0).to(device)
    # Predict
    with torch.no_grad():
        outputs = model(img_tensor)
        probs = torch.softmax(outputs, dim=1)
        conf, pred = torch.max(probs, 1)
    # Visualize
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
    ax1.imshow(image)
    ax1.set_title("输入图像")
    ax1.axis('off')
    # Top-5 probability bar chart
    top_n = 5
    top_probs, top_indices = torch.topk(probs, top_n)
    ax2.barh([CLASSES[i] for i in top_indices.cpu().numpy()[0]],
             top_probs.cpu().numpy()[0])
    ax2.set_xlabel("概率")
    ax2.set_title(f"Top {top_n} 预测结果")
    # Save to an in-memory buffer and return
    buf = io.BytesIO()
    fig.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)  # free the figure so repeated clicks do not accumulate memory
    buf.seek(0)
    result_img = Image.open(buf)
    return f"预测结果: {CLASSES[pred.item()]} | 置信度: {conf.item():.4f}", result_img
```

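4. Chinese text rendering

To keep Chinese labels in Matplotlib figures from rendering as empty boxes:

```python
import matplotlib.pyplot as plt

plt.rcParams["font.family"] = ["SimHei"]    # use a font that contains Chinese glyphs
plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly
```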
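SimHei ships with Windows but is usually absent on Linux and macOS, in which case the setting above has no effect. The sketch below picks the first installed font from a candidate list instead. The helper name `pick_cjk_font` and the candidate font names are assumptions for illustration; adjust the list to the fonts actually present on your system.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend, safe on servers without a display
import matplotlib.pyplot as plt
from matplotlib import font_manager


def pick_cjk_font(candidates):
    """Return the first candidate font installed on this machine, else None."""
    installed = {font.name for font in font_manager.fontManager.ttflist}
    for name in candidates:
        if name in installed:
            return name
    return None


# Candidate names are assumptions, not an exhaustive or authoritative list
CANDIDATES = ["SimHei", "Microsoft YaHei", "PingFang SC",
              "Noto Sans CJK SC", "WenQuanYi Micro Hei"]
chosen = pick_cjk_font(CANDIDATES)
if chosen is not None:
    plt.rcParams["font.family"] = [chosen]
plt.rcParams["axes.unicode_minus"] = False
print("Using font:", chosen)
```

If `chosen` is `None`, no candidate is installed and Chinese labels will still fail to render until a CJK font is added to the system.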

IV. Usage Guide

1. Environment setup

```bash
# 1. Install dependencies
pip install gradio torch torchvision numpy matplotlib pillow
# 2. Prepare the model files (train them beforehand and place them
#    in the same directory as the script)
#    best_alexnet_cifar10.pth
#    best_resnet_cifar10.pth
#    best_vgg16_cifar10_final.pth
```

2. Launching the platform

```python
if __name__ == "__main__":
    demo = create_interface()
    demo.launch(server_name="localhost", server_port=7860, share=False)
```

After launch, open http://localhost:7860 to reach the GUI.

3. 各模块使用方法

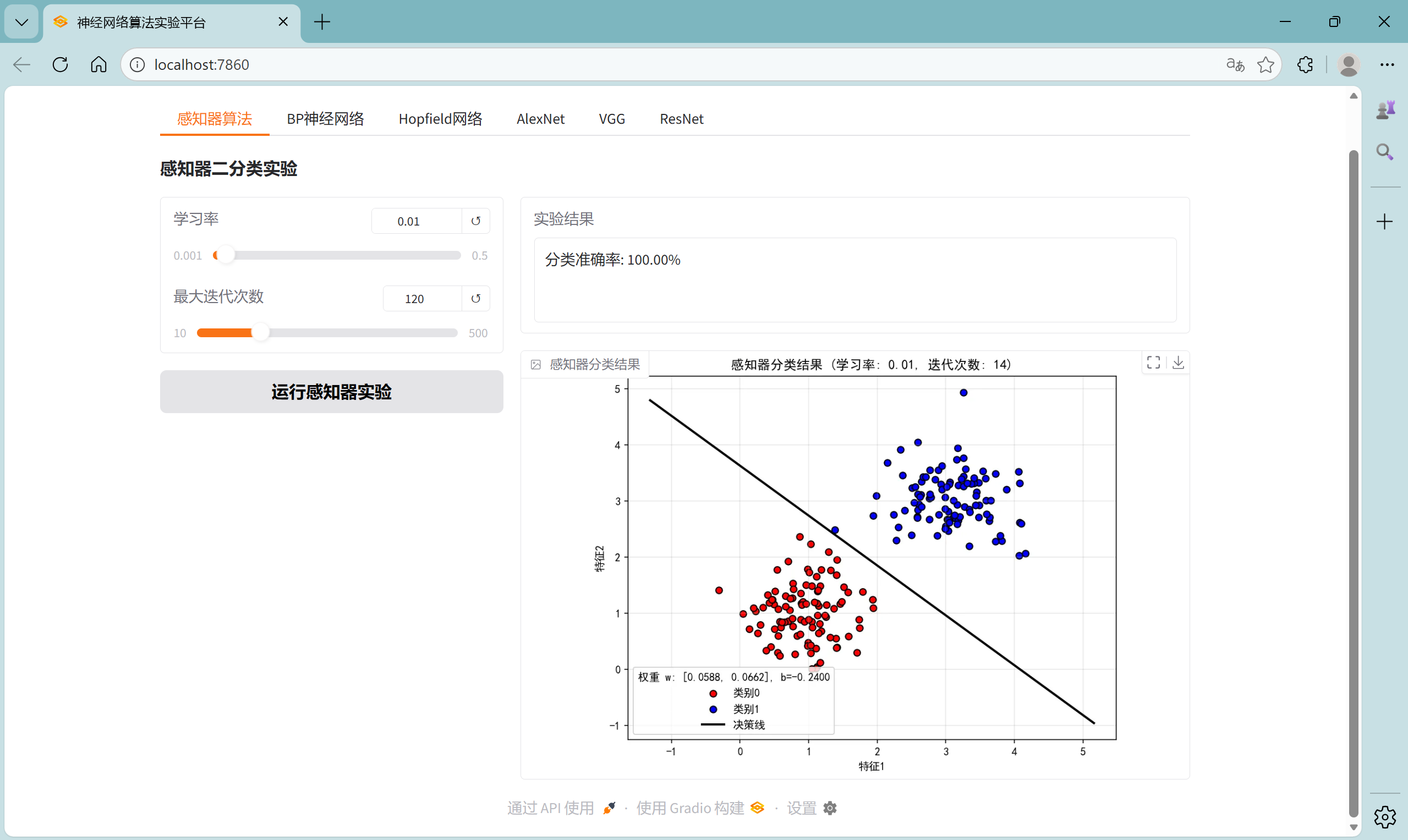

(1)感知器算法实验

- 调节 “学习率”(0.001-0.5)和 “最大迭代次数”(10-500)滑块;

- 点击 “运行感知器实验”;

- 查看右侧 “实验结果”(准确率)和 “感知器分类结果” 图(含决策线、权重信息)。

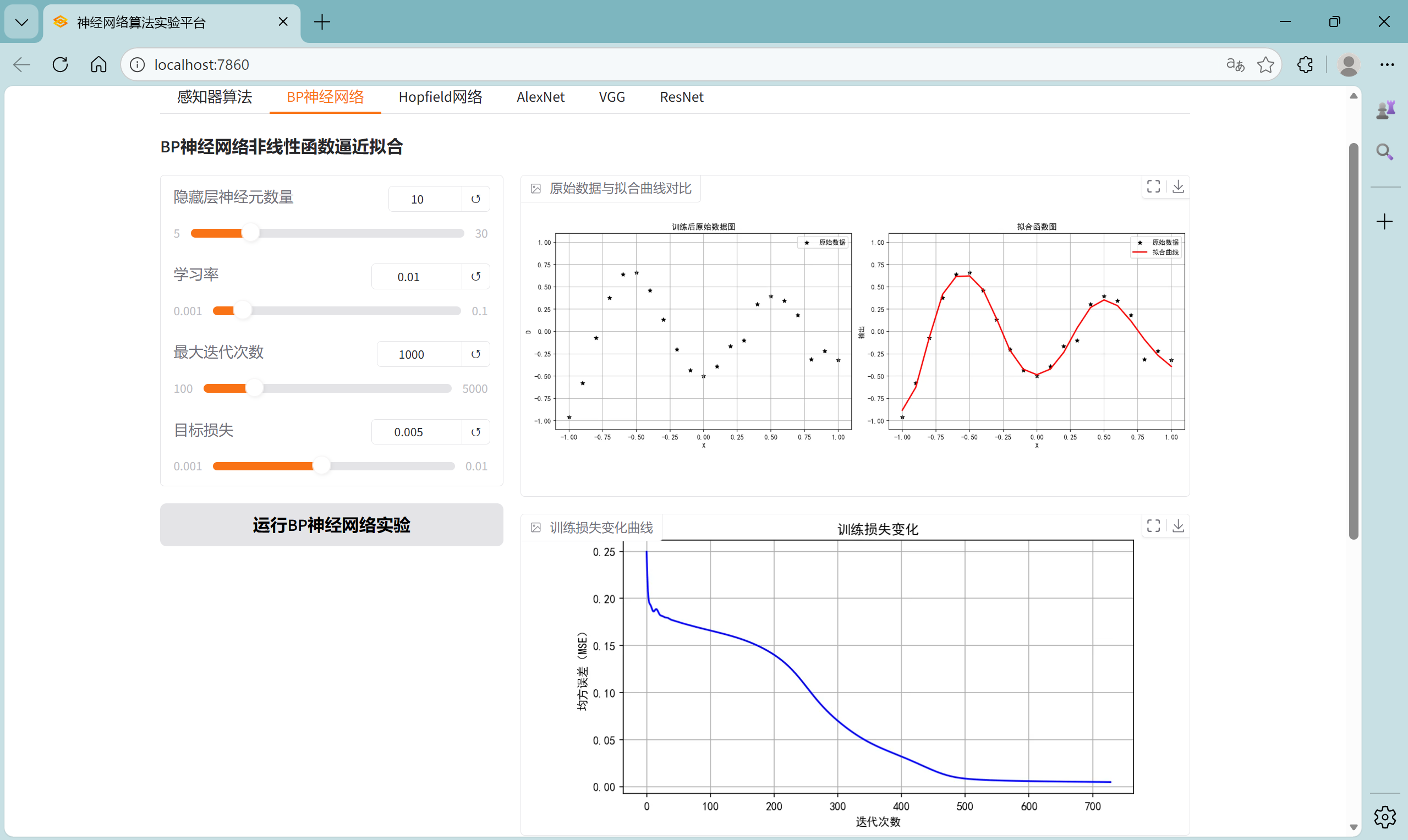

(2)BP 神经网络实验

- 调节 “隐藏层神经元数量”(5-30)、“学习率”(0.001-0.1)、“最大迭代次数”(100-5000)、“目标损失”(0.001-0.01);

- 点击 “运行 BP 神经网络实验”;

- 查看 “原始数据与拟合曲线对比”“训练损失变化曲线” 和 “网络参数与训练结果”。

(3)Hopfield 网络实验

- 调节 “噪声水平”(0-50%)滑块;

- 点击 “运行 Hopfield 网络实验”;

- 查看三图对比结果(原图 / 带噪声图 / 恢复图)。

(4)深度学习模型分类实验

- 切换到对应模型 Tab(AlexNet/VGG/ResNet);

- 点击 “上传图像” 按钮,选择本地图像;

- 点击 “运行 XXX 分类”;

- 查看分类结果和 Top5 概率条形图。

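V. Experiment Results

1. Perceptron experiment

2. BP neural network experiment

3. Hopfield network experiment

4. AlexNet classification experiment

5. VGG classification experiment

6. ResNet classification experiment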

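VI. Code Optimizations and Extension Directions

1. Existing optimizations

- Cross-device support: CPU/GPU is detected automatically, and weights are mapped to the detected device when a model is loaded;

- Early stopping: both the BP network and the perceptron stop early to avoid wasted iterations;

- Visualization: every figure is saved to an in-memory buffer, avoiding disk I/O;

- Chinese support: the Matplotlib garbled-text problem is fixed, making the interface friendlier.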
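The in-memory buffer pattern mentioned in the list above recurs in every experiment function. A small helper along the following lines could factor it out; the name `figure_to_image` is hypothetical and this is a sketch rather than the article's code. It also closes the figure after rendering, so that repeated button clicks do not keep old figures alive.

```python
import io

import matplotlib
matplotlib.use("Agg")  # render off-screen
import matplotlib.pyplot as plt
from PIL import Image


def figure_to_image(fig, dpi=150):
    """Render a Matplotlib figure to an in-memory PNG and return a PIL image."""
    buf = io.BytesIO()
    fig.savefig(buf, format="png", bbox_inches="tight", dpi=dpi)
    plt.close(fig)  # release the figure once it has been rendered
    buf.seek(0)
    image = Image.open(buf)
    image.load()  # decode now, so the result no longer depends on lazy reads
    return image


# Usage: build any figure, then hand it to the helper
fig, ax = plt.subplots(figsize=(4, 3))
ax.plot([0, 1, 2], [0, 1, 4])
result = figure_to_image(fig)
print(result.format, result.size)
```

The returned PIL image can be passed straight to a `gr.Image` output, exactly like the `result_img` values in the experiment functions.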

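2. Extension directions

- Model training: add in-app training of the deep learning models so that weight files no longer have to be prepared in advance;

- More classic networks: add modules for RBF, CNN, and other networks;

- Result export: export experiment results (figures, parameters) as PDF/PNG files;

- Batch prediction: classify multiple images at once with the deep learning models;

- Multilingual support: add an English interface option;

- Remote access: launch with Gradio's `share=True` to obtain a publicly reachable link, enabling remote experiments.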

VII. Complete Code

```python
import io
import os

import gradio as gr
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from PIL import Image
from torchvision import transforms

# Use a font that contains Chinese glyphs
plt.rcParams["font.family"] = ["SimHei"]
plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly

# ====================== Common configuration ======================
# Model checkpoint paths
MODEL_PATHS = {
    "AlexNet": "best_alexnet_cifar10.pth",
    "ResNet": "best_resnet_cifar10.pth",
    "VGG": "best_vgg16_cifar10_final.pth"
}

# CIFAR-10 classes
CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']


def fig_to_image(fig):
    """Render a figure to an in-memory PNG, close it, and return a PIL image."""
    buf = io.BytesIO()
    fig.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)  # avoid accumulating open figures across button clicks
    buf.seek(0)
    return Image.open(buf)


# ====================== 1. Deep learning models (AlexNet/VGG/ResNet) ======================
# Preprocessing
def get_transform(model_name):
    if model_name == "VGG":
        return transforms.Compose([
            transforms.Resize((144, 144)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.2470, 0.2435, 0.2616])
        ])
    else:
        return transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
        ])


# Model loading
def load_model(model_name):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    if model_name == "AlexNet":
        model = torchvision.models.alexnet(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Linear(in_features, len(CLASSES))

    elif model_name == "ResNet":
        model = torchvision.models.resnet18(weights=None)
        in_features = model.fc.in_features
        model.fc = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                       torch.nn.Linear(in_features, len(CLASSES)))

    elif model_name == "VGG":
        model = torchvision.models.vgg16(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                                  torch.nn.Linear(in_features, len(CLASSES)))
    else:
        raise ValueError(f"未知模型: {model_name}")

    # Load the trained weights; fall back to random weights with a warning
    if os.path.exists(MODEL_PATHS[model_name]):
        model.load_state_dict(torch.load(MODEL_PATHS[model_name], map_location=device))
    else:
        print(f"警告: 未找到模型文件 {MODEL_PATHS[model_name]},使用随机权重")

    model.to(device)
    model.eval()
    return model, device


# Prediction
def predict_image(model_name, image):
    if image is None:
        return "请上传一张图片", None

    model, device = load_model(model_name)
    transform = get_transform(model_name)

    # Preprocess the image
    img = Image.fromarray(image).convert("RGB")
    img_tensor = transform(img).unsqueeze(0).to(device)

    # Predict
    with torch.no_grad():
        outputs = model(img_tensor)
        probs = torch.softmax(outputs, dim=1)
        conf, pred = torch.max(probs, 1)

    # Plot the result
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

    # Input image
    ax1.imshow(image)
    ax1.set_title("输入图像")
    ax1.axis('off')

    # Probability bar chart
    top_n = 5
    top_probs, top_indices = torch.topk(probs, top_n)
    ax2.barh([CLASSES[i] for i in top_indices.cpu().numpy()[0]],
             top_probs.cpu().numpy()[0])
    ax2.set_xlabel("概率")
    ax2.set_title(f"Top {top_n} 预测结果")
    ax2.set_xlim(0, 1)

    fig.tight_layout()
    result_img = fig_to_image(fig)

    return f"预测结果: {CLASSES[pred.item()]} | 置信度: {conf.item():.4f}", result_img


# ====================== 2. Hopfield network ======================
class HopfieldNetwork:
    def __init__(self, num_neurons):
        self.num_neurons = num_neurons
        self.weights = np.zeros((num_neurons, num_neurons))

    def train(self, patterns):
        # Hebbian learning rule; the diagonal stays zero
        for i in range(self.num_neurons):
            for j in range(self.num_neurons):
                if i != j:
                    self.weights[i, j] = (1 / self.num_neurons) * np.sum(patterns[:, i] * patterns[:, j])

    def predict(self, pattern, max_iter=100):
        current = pattern.copy()
        for _ in range(max_iter):
            order = np.random.permutation(self.num_neurons)
            new_state = current.copy()
            for idx in order:
                # Asynchronous update: read from new_state so each neuron sees
                # the changes made earlier in the same sweep
                activation = np.dot(self.weights[idx, :], new_state)
                new_state[idx] = 1 if activation >= 0 else -1
            if np.array_equal(new_state, current):
                break
            current = new_state
        return current


def create_patterns(size=12):
    """Create the tree and smiley-face patterns (indices assume size=12)."""
    tree = np.ones((size, size)) * -1
    smile = np.ones((size, size)) * -1

    # Tree pattern
    for i in range(5):  # crown layers
        tree[i + 2, (5 - i):(7 + i)] = 1  # centered crown
    tree[6:10, 5:7] = 1  # trunk

    # Smiley-face pattern
    smile[3:6, 5] = smile[3:6, 8] = 1  # eyes
    smile[7:9, [3, 10]] = 1
    smile[9, [4, 9]] = 1
    smile[10, 5:9] = 1  # middle of the mouth

    return tree, smile


def add_noise(pattern, noise_level):
    """Flip the given fraction of pixels."""
    noisy = pattern.copy()
    pixels = pattern.size
    flip_count = int(noise_level * pixels)
    indices = np.random.choice(pixels, flip_count, replace=False)
    rows, cols = pattern.shape
    for idx in indices:
        r, c = idx // cols, idx % cols
        noisy[r, c] *= -1
    return noisy


def hopfield_demo(noise_level):
    """Hopfield demo: original, noisy, and recovered images side by side."""
    img_size = 12
    tree, smile = create_patterns(img_size)

    # Train the network
    patterns = np.array([tree.flatten(), smile.flatten()])
    net = HopfieldNetwork(img_size * img_size)
    net.train(patterns)

    # Add noise to both patterns and recover them
    noisy_tree = add_noise(tree, noise_level)
    recovered_tree = net.predict(noisy_tree.flatten()).reshape(img_size, img_size)

    noisy_smile = add_noise(smile, noise_level)
    recovered_smile = net.predict(noisy_smile.flatten()).reshape(img_size, img_size)

    # Visualize the result
    fig, axes = plt.subplots(2, 3, figsize=(10, 12))
    fig.suptitle(f'Hopfield网络图像恢复实验(噪声水平:{noise_level * 100:.0f}%)', fontsize=16, fontweight='bold')

    # Row 1: tree
    axes[0, 0].imshow(tree, cmap='binary')
    axes[0, 0].set_title('原始树图案', fontsize=12)
    axes[0, 0].axis('off')

    axes[0, 1].imshow(noisy_tree, cmap='binary')
    axes[0, 1].set_title('带噪声的树图案', fontsize=12)
    axes[0, 1].axis('off')

    axes[0, 2].imshow(recovered_tree, cmap='binary')
    axes[0, 2].set_title('恢复的树图案', fontsize=12)
    axes[0, 2].axis('off')

    # Row 2: smiley face
    axes[1, 0].imshow(smile, cmap='binary')
    axes[1, 0].set_title('原始笑脸图案', fontsize=12)
    axes[1, 0].axis('off')

    axes[1, 1].imshow(noisy_smile, cmap='binary')
    axes[1, 1].set_title('带噪声的笑脸图案', fontsize=12)
    axes[1, 1].axis('off')

    axes[1, 2].imshow(recovered_smile, cmap='binary')
    axes[1, 2].set_title('恢复的笑脸图案', fontsize=12)
    axes[1, 2].axis('off')

    fig.tight_layout()
    return fig_to_image(fig)


# ====================== 3. Perceptron ======================
class Perceptron:
    def __init__(self, learning_rate=0.01, max_epochs=100):
        self.lr = learning_rate
        self.max_epochs = max_epochs
        self.w = None
        self.b = 0.0
        self.final_w = None
        self.final_b = 0.0

    def activate(self, z):
        return 1 if z >= 0 else -1

    def predict(self, x):
        return self.activate(np.dot(x, self.w) + self.b)

    def train(self, X, y):
        y_sym = np.where(y == 0, -1, 1)
        n_samples, n_features = X.shape
        self.w = np.zeros(n_features)
        self.b = 0.0
        epoch_params = []

        for epoch in range(self.max_epochs):
            updated = False
            for i in range(n_samples):
                y_pred = self.predict(X[i])
                if y_pred != y_sym[i]:
                    self.w += self.lr * (y_sym[i] - y_pred) * X[i]
                    self.b += self.lr * (y_sym[i] - y_pred)
                    updated = True
            # float() works whether b is a Python number or a NumPy scalar
            epoch_params.append((self.w.copy(), float(self.b)))
            if not updated:
                break

        self.final_w, self.final_b = self.w.copy(), float(self.b)
        return epoch_params


def generate_data(n_samples=100, seed=42):
    np.random.seed(seed)
    # Generate two separable classes
    class0 = np.random.randn(n_samples, 2) * 0.5 + np.array([1, 1])
    class1 = np.random.randn(n_samples, 2) * 0.5 + np.array([3, 3])
    X = np.vstack((class0, class1))
    y = np.hstack((np.zeros(n_samples), np.ones(n_samples)))
    return X, y


def perceptron_demo(learning_rate, max_epochs):
    X, y = generate_data()
    perceptron = Perceptron(learning_rate=learning_rate, max_epochs=int(max_epochs))
    epoch_params = perceptron.train(X, y)

    # Plot the result
    fig, ax = plt.subplots(figsize=(8, 6))

    # Sample points
    ax.scatter(X[y == 0][:, 0], X[y == 0][:, 1], c='red', label='类别0', edgecolors='k')
    ax.scatter(X[y == 1][:, 0], X[y == 1][:, 1], c='blue', label='类别1', edgecolors='k')

    # Final decision line
    x1 = np.linspace(X[:, 0].min() - 1, X[:, 0].max() + 1, 100)
    if abs(perceptron.final_w[1]) > 1e-6:
        x2 = (-perceptron.final_w[0] * x1 - perceptron.final_b) / perceptron.final_w[1]
    else:
        # Nearly vertical line: fix x1 and sweep x2 instead
        x1 = [-perceptron.final_b / (perceptron.final_w[0] + 1e-6)] * 100
        x2 = np.linspace(X[:, 1].min() - 1, X[:, 1].max() + 1, 100)
    ax.plot(x1, x2, 'k-', linewidth=2, label='决策线')

    ax.set_xlabel('特征1')
    ax.set_ylabel('特征2')
    ax.set_title(f'感知器分类结果 (学习率: {learning_rate}, 迭代次数: {len(epoch_params)})')
    ax.legend(title=f'权重 w: [{perceptron.final_w[0]:.4f}, {perceptron.final_w[1]:.4f}], b={perceptron.final_b:.4f}')
    ax.grid(alpha=0.3)

    result_img = fig_to_image(fig)

    # Compute the accuracy
    y_sym = np.where(y == 0, -1, 1)
    y_pred = np.apply_along_axis(perceptron.predict, 1, X)
    accuracy = np.mean(y_pred == y_sym)

    return f"分类准确率: {accuracy:.2%}", result_img


# ====================== 4. BP neural network ======================
class BPNetwork(nn.Module):
    def __init__(self, hidden_size=10):
        super().__init__()
        self.hidden_layer = nn.Linear(in_features=1, out_features=hidden_size)  # 1 -> hidden_size
        self.output_layer = nn.Linear(in_features=hidden_size, out_features=1)  # hidden_size -> 1

    def forward(self, x):
        hidden_out = torch.tanh(self.hidden_layer(x))  # hidden layer, tansig (tanh) activation
        output_out = torch.tanh(self.output_layer(hidden_out))  # output layer, tansig (tanh) activation
        return output_out


def train_bp_network(hidden_size, learning_rate, max_epochs, target_loss):
    # Slider values may arrive as floats; layer sizes and range() need ints
    hidden_size = int(hidden_size)
    max_epochs = int(max_epochs)

    # Experiment data
    X = [
        -1.0, -0.9, -0.8, -0.7, -0.6, -0.5, -0.4,
        -0.3, -0.2, -0.1, 0.0, 0.1, 0.2, 0.3,
        0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0
    ]
    D = [
        -0.9602, -0.5770, -0.0729, 0.3771, 0.6405, 0.6600, 0.4609,
        0.1336, -0.2013, -0.4344, -0.5000, -0.3930, -0.1647, -0.0988,
        0.3072, 0.3960, 0.3449, 0.1816, -0.3120, -0.2189, -0.3201
    ]

    # Convert to PyTorch tensors
    X_tensor = torch.tensor(X, dtype=torch.float32).view(-1, 1)  # 21 x 1
    D_tensor = torch.tensor(D, dtype=torch.float32).view(-1, 1)  # 21 x 1

    # Network and training setup
    net = BPNetwork(hidden_size=hidden_size)
    criterion = nn.MSELoss()  # mean squared error
    optimizer = optim.Adam(net.parameters(), lr=learning_rate)

    # Training loop
    loss_history = []
    for epoch in range(max_epochs):
        O_tensor = net(X_tensor)  # forward pass
        loss = criterion(O_tensor, D_tensor)
        loss_history.append(loss.item())

        # Backpropagation and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Early stopping
        if loss.item() < target_loss:
            break

    # Prediction
    with torch.no_grad():
        O = net(X_tensor).numpy()

    # Plot the result
    fig1, axes = plt.subplots(1, 2, figsize=(14, 5))

    # Left: original data points
    axes[0].scatter(X, D, c='k', marker='*', label='原始数据')
    axes[0].set_xlabel('X')
    axes[0].set_ylabel('D')
    axes[0].set_title('训练后原始数据图')
    axes[0].set_xlim(-1.1, 1.1)
    axes[0].set_ylim(-1.1, 1.1)
    axes[0].grid(True)
    axes[0].legend()

    # Right: fitted curve
    axes[1].scatter(X, D, c='k', marker='*', label='原始数据')
    axes[1].plot(X, O, 'r-', linewidth=2, label='拟合曲线')
    axes[1].set_xlabel('X')
    axes[1].set_ylabel('输出')
    axes[1].set_title('拟合函数图')
    axes[1].set_xlim(-1.1, 1.1)
    axes[1].set_ylim(-1.1, 1.1)
    axes[1].grid(True)
    axes[1].legend()

    fig1.tight_layout()
    result_img1 = fig_to_image(fig1)

    # Loss curve
    fig2 = plt.figure(figsize=(8, 4))
    plt.plot(range(len(loss_history)), loss_history, 'b-')
    plt.xlabel('迭代次数')
    plt.ylabel('均方误差(MSE)')
    plt.title('训练损失变化')
    plt.grid(True)
    result_img2 = fig_to_image(fig2)

    # Collect the network parameters
    hidden_weights = net.hidden_layer.weight.detach().numpy()
    hidden_bias = net.hidden_layer.bias.detach().numpy()
    output_weights = net.output_layer.weight.detach().numpy()
    output_bias = net.output_layer.bias.detach().numpy()

    params_text = "===== 网络参数 =====\n"
    params_text += f"输入层到隐含层权重:\n{hidden_weights.T}\n\n"
    params_text += f"隐含层阈值:\n{hidden_bias}\n\n"
    params_text += f"隐含层到输出层权重:\n{output_weights}\n\n"
    params_text += f"输出层阈值:\n{output_bias}\n\n"
    params_text += f"最终损失:{loss_history[-1]:.6f}\n"
    params_text += f"训练迭代次数:{len(loss_history)}"

    return result_img1, result_img2, params_text


# ====================== Gradio interface ======================
def create_interface():
    with gr.Blocks(title="神经网络算法实验平台") as demo:
        gr.Markdown("# 神经网络算法实验平台")
        with gr.Tabs():
            # Perceptron experiment
            with gr.Tab("感知器算法"):
                gr.Markdown("### 感知器二分类实验")
                with gr.Row():
                    with gr.Column(scale=1):
                        lr = gr.Slider(minimum=0.001, maximum=0.5, value=0.01, step=0.001, label="学习率")
                        epochs = gr.Slider(minimum=10, maximum=500, value=100, step=10, label="最大迭代次数")
                        perceptron_btn = gr.Button("运行感知器实验")
                    with gr.Column(scale=2):
                        perceptron_text = gr.Textbox(label="实验结果", lines=3)
                        perceptron_plot = gr.Image(label="感知器分类结果", height=400)

                perceptron_btn.click(
                    fn=perceptron_demo,
                    inputs=[lr, epochs],
                    outputs=[perceptron_text, perceptron_plot]
                )

            # BP neural network
            with gr.Tab("BP神经网络"):
                gr.Markdown("### BP神经网络非线性函数逼近拟合")
                with gr.Row():
                    with gr.Column(scale=1):
                        hidden_size = gr.Slider(minimum=5, maximum=30, value=10, step=1, label="隐藏层神经元数量")
                        bp_lr = gr.Slider(minimum=0.001, maximum=0.1, value=0.01, step=0.001, label="学习率")
                        bp_max_epochs = gr.Slider(minimum=100, maximum=5000, value=1000, step=100, label="最大迭代次数")
                        target_loss = gr.Slider(minimum=0.001, maximum=0.01, value=0.005, step=0.001, label="目标损失")
                        bp_btn = gr.Button("运行BP神经网络实验")
                    with gr.Column(scale=2):
                        bp_plot1 = gr.Image(label="原始数据与拟合曲线对比", height=300)
                        bp_plot2 = gr.Image(label="训练损失变化曲线", height=300)
                        bp_params = gr.Textbox(label="网络参数与训练结果", lines=10)

                bp_btn.click(
                    fn=train_bp_network,
                    inputs=[hidden_size, bp_lr, bp_max_epochs, target_loss],
                    outputs=[bp_plot1, bp_plot2, bp_params]
                )

            # Hopfield network experiment
            with gr.Tab("Hopfield网络"):
                gr.Markdown("### Hopfield网络图像恢复实验")
                with gr.Row():
                    with gr.Column(scale=1):
                        noise_level = gr.Slider(minimum=0.0, maximum=0.5, value=0.1, step=0.05, label="噪声水平(0-50%)")
                        hopfield_btn = gr.Button("运行Hopfield网络实验")
                    with gr.Column(scale=2):
                        hopfield_plot = gr.Image(label="Hopfield网络三图对比结果", height=600)

                hopfield_btn.click(
                    fn=hopfield_demo,
                    inputs=[noise_level],
                    outputs=[hopfield_plot]
                )

            # AlexNet experiment
            with gr.Tab("AlexNet"):
                gr.Markdown("### AlexNet图像分类实验 (CIFAR-10)")
                with gr.Row():
                    with gr.Column(scale=1):
                        alexnet_img = gr.Image(type="numpy", label="上传图像", height=200)
                        alexnet_btn = gr.Button("运行AlexNet分类")
                    with gr.Column(scale=2):
                        alexnet_text = gr.Textbox(label="分类结果", lines=2)
                        alexnet_plot = gr.Image(label="分类概率图", height=300)

                alexnet_btn.click(
                    fn=lambda img: predict_image("AlexNet", img),
                    inputs=[alexnet_img],
                    outputs=[alexnet_text, alexnet_plot]
                )

            # VGG experiment
            with gr.Tab("VGG"):
                gr.Markdown("### VGG图像分类实验 (CIFAR-10)")
                with gr.Row():
                    with gr.Column(scale=1):
                        vgg_img = gr.Image(type="numpy", label="上传图像", height=200)
                        vgg_btn = gr.Button("运行VGG分类")
                    with gr.Column(scale=2):
                        vgg_text = gr.Textbox(label="分类结果", lines=2)
                        vgg_plot = gr.Image(label="分类概率图", height=300)

                vgg_btn.click(
                    fn=lambda img: predict_image("VGG", img),
                    inputs=[vgg_img],
                    outputs=[vgg_text, vgg_plot]
                )

            # ResNet experiment
            with gr.Tab("ResNet"):
                gr.Markdown("### ResNet图像分类实验 (CIFAR-10)")
                with gr.Row():
                    with gr.Column(scale=1):
                        resnet_img = gr.Image(type="numpy", label="上传图像", height=200)
                        resnet_btn = gr.Button("运行ResNet分类")
                    with gr.Column(scale=2):
                        resnet_text = gr.Textbox(label="分类结果", lines=2)
                        resnet_plot = gr.Image(label="分类概率图", height=300)

                resnet_btn.click(
                    fn=lambda img: predict_image("ResNet", img),
                    inputs=[resnet_img],
                    outputs=[resnet_text, resnet_plot]
                )

    return demo


# Launch the app
if __name__ == "__main__":
    demo = create_interface()
    demo.launch(server_name="localhost", server_port=7860, share=False)
```
